- #Mediawiki export all pages install

- #Mediawiki export all pages code

I modified one to look for the shared secret instead of relying on the source IPs.

#Mediawiki export all pages install

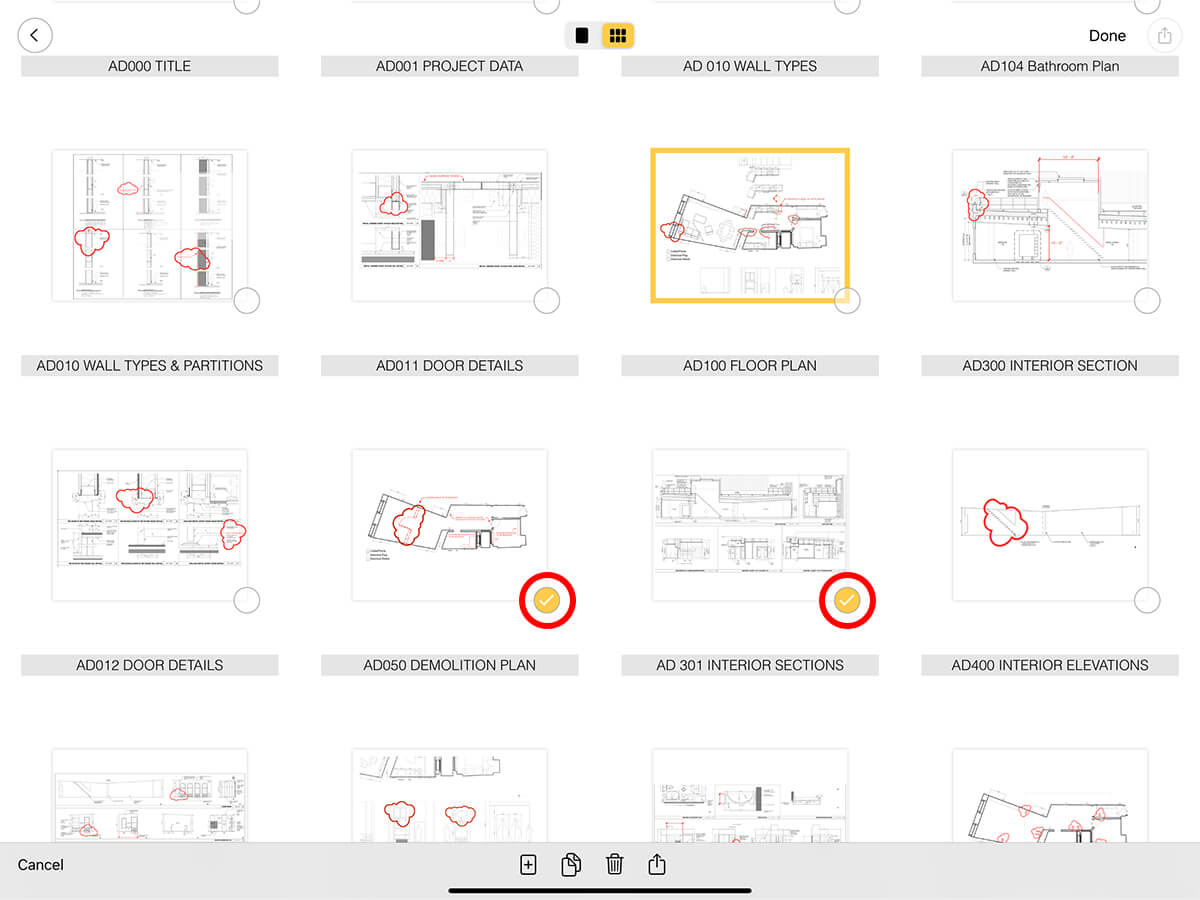

Install a github webhook PHP on the web server and configure it.It is not perfect, but covers links between the pages even with anchors and links to non-existing pages, images, thumbnails, and even supports authentication for dumping a protected wiki. I had to extend zim and the sourceviewer plugin so they can operate without the gui. I recently had the task to export a small MediaWiki to HTML. Install zim on the server and make sure it can do the export without the gui component.Push the source zim text files into a github repository.Write a bash script to do the export for all the files Move to the server.I had to extend/fix zim because it had issues with exporting (index/source code)
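The webhook described above is a modified PHP script. The following is only a Python sketch of the same idea, not that script. GitHub signs each delivery with the secret configured on the webhook and sends the HMAC-SHA256 of the raw request body in the `X-Hub-Signature-256` header, formatted as `sha256=<hex digest>`. Checking that signature, rather than the caller's IP address, is what lets the hook reject forged requests. The function names and the example secret are hypothetical.

```python
import hashlib
import hmac
from typing import Optional


def signature_for(secret: bytes, body: bytes) -> str:
    """Value GitHub sends in X-Hub-Signature-256 for this body and secret."""
    return "sha256=" + hmac.new(secret, body, hashlib.sha256).hexdigest()


def is_valid_delivery(secret: bytes, body: bytes, header_value: Optional[str]) -> bool:
    """Trust the request only if it was signed with the shared secret.

    This replaces an allow-list of GitHub source IPs, which can change.
    """
    if not header_value:
        return False
    expected = signature_for(secret, body)
    # Constant-time comparison so timing does not reveal how much of the signature matched.
    return hmac.compare_digest(expected.encode(), header_value.encode())


if __name__ == "__main__":
    secret = b"example-shared-secret"  # hypothetical; must equal the secret set on GitHub
    body = b'{"ref": "refs/heads/master"}'
    print(is_valid_delivery(secret, body, signature_for(secret, body)))  # True
    print(is_valid_delivery(secret, body, "sha256=0000"))  # False
```

In a real hook, the server would pull the repository and run the export script only after this check passes.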

# Mediawiki export all pages code

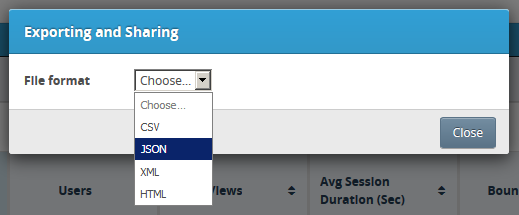

Exporting all the files of a wiki can be done in a few different ways. If you have FTP access to the wiki, you can move the files by following the procedure at Manual:Moving a wiki. If you lack such access, as can happen for instance when a wiki is abandoned by its site owner, you will probably need to use workarounds. This took a bit longer than I expected, with quite a bit of coding to beat various tools into shape:

- Export the MediaWiki into an XML file by using the Special:Export page.
- Run the wiki extractor script, which I modified to extract individual files (WikiExtractor to individual files.py).
- Run the extended pandoc to convert the extracted MediaWiki files into zimwiki format. I built a native Haskell module, thinking that since the zimwiki syntax is close to DokuWiki I might reuse the DokuWiki export code; in reality it should have been done as an external Lua script.
- Run a post-processing step to fix the converted files where pandoc is not suited to the job (for example, changing links from categories to tags).
- Create the static site locally: grab MediaWiki HTML code and convert it to a zim template so that the static site looks like MediaWiki.

GitHub can be used for editing the files directly on the web.
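The Special:Export and extraction steps can be illustrated with a minimal Python sketch that splits an export file into one wikitext file per page. This is not the modified WikiExtractor script mentioned above. It assumes an export containing `<page>`, `<title>`, `<revision>` and `<text>` elements, and the `.mediawiki` file names it produces follow a hypothetical naming scheme.

```python
import re
import xml.etree.ElementTree as ET
from pathlib import Path


def iter_pages(xml_source):
    """Yield (title, wikitext) for every <page> in a Special:Export file.

    "{*}" matches any XML namespace (Python 3.8+), so the export schema
    version (export-0.10, export-0.11, ...) does not matter.
    """
    root = ET.parse(xml_source).getroot()
    for page in root.iterfind("{*}page"):
        title = page.findtext("{*}title", default="")
        # With full history there can be several revisions; the last one is the newest.
        revisions = page.findall("{*}revision")
        text = revisions[-1].findtext("{*}text", default="") if revisions else ""
        yield title, text


def safe_filename(title):
    """Hypothetical naming scheme: 'Main Page' -> 'Main_Page.mediawiki'."""
    name = re.sub(r"[^\w\-]+", "_", title.strip()).strip("_")
    return (name or "untitled") + ".mediawiki"


def split_export(xml_source, out_dir):
    """Write each page's wikitext to its own file and return the paths."""
    out_dir = Path(out_dir)
    out_dir.mkdir(parents=True, exist_ok=True)
    written = []
    for title, text in iter_pages(xml_source):
        path = out_dir / safe_filename(title)
        path.write_text(text, encoding="utf-8")
        written.append(path)
    return written
```

A call such as `split_export("wiki-export.xml", "extracted")` (both names hypothetical) would leave one file per page for the pandoc step. Titles that differ only in punctuation, such as `A B` and `A/B`, map to the same file name here, so a real run would need a collision check.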

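For the post-processing step, here is a sketch of turning MediaWiki category links into zim tags. It assumes, without having checked pandoc's actual output, that category membership survives conversion as `[[Category:Name]]` (optionally with a `|sort key`), and that a zim tag is written as `@name` with underscores in place of spaces. Links of the form `[[:Category:Name]]`, which point at a category page instead of adding the page to it, are deliberately left alone. The function names are hypothetical.

```python
import re
from pathlib import Path

# [[Category:Name]] or [[Category:Name|sort key]]; the prefix is matched case-insensitively.
# [[:Category:Name]] (a plain link to the category page) does not match.
CATEGORY_LINK = re.compile(
    r"\[\[\s*Category\s*:\s*([^\]|]+?)\s*(?:\|[^\]]*)?\]\]", re.IGNORECASE
)


def _to_tag(match):
    # Assumed zim tag form: '@' followed by the name, spaces replaced by underscores.
    return "@" + re.sub(r"\s+", "_", match.group(1).strip())


def categories_to_tags(text):
    return CATEGORY_LINK.sub(_to_tag, text)


def rewrite_notebook(root, pattern="*.txt"):
    """Apply categories_to_tags to every zim page file under root; return changed paths."""
    changed = []
    for path in sorted(Path(root).rglob(pattern)):
        old = path.read_text(encoding="utf-8")
        new = categories_to_tags(old)
        if new != old:
            path.write_text(new, encoding="utf-8")
            changed.append(path)
    return changed
```

Doing this as a separate text pass keeps the pandoc writer simple: the converter only has to produce valid zimwiki, and notebook-specific conventions such as tags live in a small script that is easy to adjust.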

We are looking into other options for transferring the content from MediaWiki directly to modern SP pages, if possible, but so far we haven't found any.

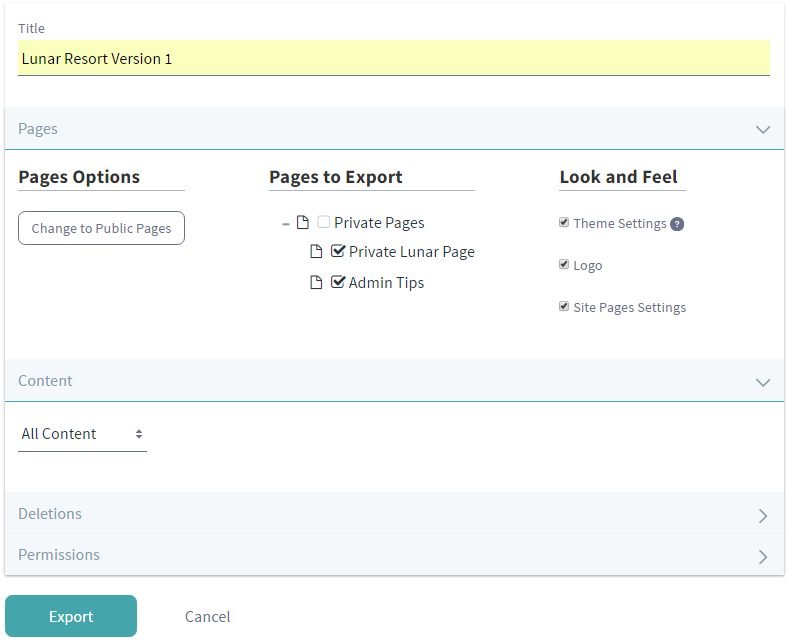

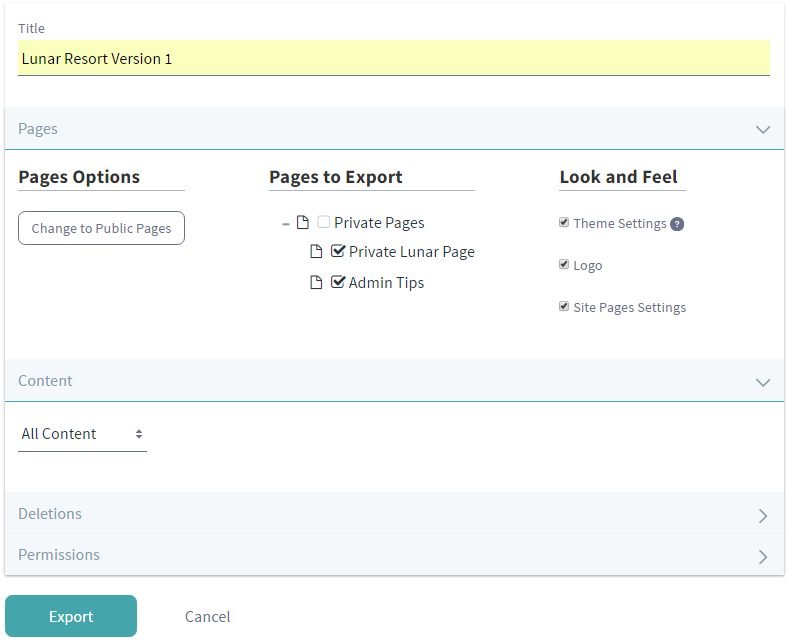

Moving off MediaWiki to a static site

Why:

- Ease of use: edit text straight on the desktop with a desktop app, with all text up to date and available.
- Simplicity: no need to remember and maintain logins, anti-spam plugins, SQL configs, or MediaWiki internals.
- Security: a reduced attack surface, with no more PHP, MySQL, or MediaWiki plugins.

How it works:

- Store the files locally in the zim wiki format as part of the documents notebook.
- Keep the text files under version control on GitHub. This way a web server and any number of remote systems can have a recent copy.
- Have the web server notice changes in the GitHub repository and recreate the site, emailing the summary to me.

MediaWiki has a data transfer extension that exports its content in XML format only.
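The rebuild-and-notify step could look roughly like this once the webhook has accepted a delivery. The sketch collects the files touched by a GitHub push event (each entry in the payload's `commits` list carries `added`, `modified` and `removed` path lists) and wraps them, together with the export script's output, in a summary email. The function names and addresses are hypothetical, and the message is only built here, not sent.

```python
from email.message import EmailMessage

CHANGE_KINDS = ("added", "modified", "removed")


def changed_files(push_payload):
    """Union of the per-commit file lists in a GitHub push event payload."""
    changes = {kind: set() for kind in CHANGE_KINDS}
    for commit in push_payload.get("commits", []):
        for kind in CHANGE_KINDS:
            changes[kind].update(commit.get(kind, []))
    return {kind: sorted(paths) for kind, paths in changes.items()}


def summary_email(push_payload, export_log, sender, recipient):
    """Build (but do not send) the summary mail for one rebuild."""
    changes = changed_files(push_payload)
    lines = ["Push to " + push_payload.get("ref", "unknown ref")]
    for kind in CHANGE_KINDS:
        lines.extend(f"{kind}: {path}" for path in changes[kind])
    lines += ["", "Export output:", export_log]

    msg = EmailMessage()
    total = sum(len(paths) for paths in changes.values())
    msg["Subject"] = f"Site rebuilt: {total} file(s) changed"
    msg["From"] = sender
    msg["To"] = recipient
    msg.set_content("\n".join(lines))
    return msg
```

If the server runs a local mail transfer agent, the message could then be sent with `smtplib.SMTP("localhost").send_message(msg)`.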
